There is an accompanying video on the group's YouTube channel:

Slides used in the video are here: [link].

Classifier Performance Is Not One Number

Many machine learning models can be considered classifiers - deciding whether an input belongs to one of two or more classes. The following shows an example of a model which classifies a patient as healthy or unwell with a disease.

How do we communicate a sense of this classifier's performance?

We could simply use a score of 4/5 because in testing it classified 5 people as healthy, but only 4 of the 5 were actually healthy. This seems reasonable.

However, we might care more about unwell people because healthy people aren't the problem that needs addressing. We could then say the score is 3/5 because of the 5 people it classified as unwell, only 3 were actually unwell.

Alternatively the greater concern might be avoiding sick people walking around the community who have been incorrectly told they are well. So 4/5 might actually be a better score, or perhaps 1/5 which better reflects the number of people incorrectly told they are well.

What this tells us is that one score is rarely sufficient to understand how well a classifier performs.

Confusion Matrix

We've just discussed several scenarios where different measures are useful for different tasks. These include the fraction incorrectly classified as well, and the fraction correctly classified as unwell. The above matrix, known as a confusion matrix, shows all four combinations of correct and incorrect classification:

- True Positives TP - actually well and correctly classified well

- False Negative FN - actually well but incorrectly classified unwell

- False Positive FP - actually unwell but incorrectly classified well

- True Negative TN - actually unwell and correctly classified unwell

These four numbers provide a fuller picture of how the classifier behaves. We can see the true positives and true negatives are higher than the false positives and false negatives. If we chose to, we could focus on an individual number if it was important, like the false positives (telling someone they are well when they are not).

It can be convenient to combine some of these four numbers into a score that is useful for a given scenario. The following are some very common scores:

- accuracy is $\frac{(TP+TN)}{(TP+TN+FP+FN)}$ and expresses the correct classifications as a fraction of all classifications.

- recall is $\frac{TP}{TP+FN}$ and expresses how many of the actually positive data are classified as positive.

- precision is $\frac{TP}{TP+FP}$ and expresses how much of the data classified as positive is actually positive.
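
As a quick check, here is a minimal sketch that computes these three scores for the healthy/unwell example. The counts (TP=4, FN=2, FP=1, TN=3) are inferred from the fractions quoted in this tutorial, treating "well" as the positive class, and the function name `basic_scores` is only illustrative.

```python
def basic_scores(tp, fn, fp, tn):
    """Return (accuracy, recall, precision) from confusion matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    return accuracy, recall, precision


# Counts inferred from the example: 5 people classified well (4 correctly),
# 5 people classified unwell (3 correctly).
accuracy, recall, precision = basic_scores(tp=4, fn=2, fp=1, tn=3)
print(f"accuracy={accuracy:.2f} recall={recall:.2f} precision={precision:.2f}")
```

Note that the precision here is the 4/5 score we started with, while accuracy and recall tell a slightly different story about the same classifier.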

Understanding these scores intuitively doesn't happen quickly, but happens through use. It is better to understand the plain English concepts and look these scores up when needed.

Looking at extreme examples can help develop intuitive understanding. The following shows a classifier which, given the same data, classifies only one person as healthy, and everyone else as unwell.

The classifier is not wrong in its healthy classification. However it has lumped almost everyone in the unwell class, which is intuitively wrong. We can consider this classifier as extremely cautious in assigning a healthy classification. We can see the precision is perfect because there are no mistakes in the healthy class. However the recall is very low because it has missed so many actually well people. As a combined score, the accuracy is half, which makes sense because overall it has got half of the classifications wrong.

The next picture shows a different classifier, which given the same data, just classifies everyone as healthy. It probably did no work at all and just lumped everyone in that category.

We can see this time the recall is high, perfect in fact. That's because the classifier doesn't miss any healthy people. But because it has classified unwell people as healthy, the precision is poor.
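
The two extreme classifiers can be checked with a few lines of code. This is only a sketch: the counts assume the same 6 well and 4 unwell people as before, and the helper name `scores` is made up for illustration.

```python
def scores(tp, fn, fp, tn):
    """Return accuracy, recall and precision as a dictionary."""
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "recall": tp / (tp + fn),
        "precision": tp / (tp + fp),
    }


# Very cautious classifier: only one person classified healthy, correctly.
cautious = scores(tp=1, fn=5, fp=0, tn=4)

# Lazy classifier: everyone classified healthy.
everyone_healthy = scores(tp=6, fn=0, fp=4, tn=0)

for name, result in [("cautious", cautious), ("everyone healthy", everyone_healthy)]:
    print(name, {k: round(v, 2) for k, v in result.items()})
```

The cautious classifier has perfect precision but poor recall, and the lazy classifier has perfect recall but weaker precision, matching the discussion above.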

The confusion matrix is an excellent concise summary of how a classifier behaves. If we want to compare several classifiers, a visual approach is more manageable than trying to digest several 2x2 confusion matrices.

A good way of visualising classifier performance, specifically the information contained in a confusion matrix, is a ROC plot.

ROC Plots

A ROC plot simply plots the True Positive Rate against the False Positive Rate. These are just the TP and FP normalised to the range 0 to 1:

- TPR is $\frac{TP}{\text{total } P} = \frac{TP}{TP+FN}$

- FPR is $\frac{FP}{\text{total } N} = \frac{FP}{FP+TN}$

The TPR takes account of FN as well as TP, and similarly, the FPR takes account of TN as well as FP, and as such the two scores TPR and FPR reflect all four values in a confusion matrix.

As a side note, you might have expected ROC plots to use TNR and not FPR. The FPR became the standard for historical reasons. In any case FPR represents the same information as TNR because FPR = 1 - TNR.
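
A small sketch makes the relationship concrete. It uses the counts inferred from the earlier example (TP=4, FN=2, FP=1, TN=3), and the function name `rates` is just for illustration.

```python
def rates(tp, fn, fp, tn):
    """Return (TPR, FPR, TNR) from confusion matrix counts."""
    tpr = tp / (tp + fn)  # fraction of actual positives classified positive
    fpr = fp / (fp + tn)  # fraction of actual negatives classified positive
    tnr = tn / (fp + tn)  # fraction of actual negatives classified negative
    return tpr, fpr, tnr


tpr, fpr, tnr = rates(tp=4, fn=2, fp=1, tn=3)
print(f"TPR={tpr:.3f} FPR={fpr:.3f} TNR={tnr:.3f} 1-TNR={1 - tnr:.3f}")
```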

The following shows several key points on the ROC plot. It is worth becoming familiar with them as they are a good mental reference to compare real world classifiers with.

A classifier that assigns everything negative has zero TPR, but also zero FPR. This is represented as a point in the bottom left corner of the square ROC plot. It is worth remembering this is a useless classifier.

A classifier that assigns everything positive has a TPR of 1.0, and a FPR of 1.0 too. This useless classifier is plotted at the top right of the ROC plot.

A perfect classifier which makes no mistakes at all has a TPR = 1.0, and a FPR = 0.0. This sits at the top left of the ROC plot.

A useless classifier which randomly assigns the positive classification to half the data has a TPR of 0.5, and a FPR of 0.5. This sits in the middle of the plot.

These examples show us that the useless classifiers seem to sit on the diagonal from the bottom left to the top right, and the perfect classifier sits at the top left.

This is correct. The following shows additional useless classifiers which randomly assign a positive classification for 30% and 80% of data. Whenever TPR = FPR it means the classifier is not using any useful information to assign classifications.
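
If you'd like to convince yourself, the following sketch simulates classifiers that ignore their input and assign a positive classification at random. The data set sizes, the seed and the function name are arbitrary choices made for this illustration.

```python
import random


def random_classifier_rates(p_positive, n_pos=20_000, n_neg=20_000, seed=0):
    """Simulate a classifier that says 'positive' with probability
    p_positive, regardless of the actual class. Returns (TPR, FPR)."""
    rng = random.Random(seed)
    # Actual positives that happen to be classified positive.
    tp = sum(rng.random() < p_positive for _ in range(n_pos))
    # Actual negatives that happen to be classified positive.
    fp = sum(rng.random() < p_positive for _ in range(n_neg))
    return tp / n_pos, fp / n_neg


for p in (0.3, 0.5, 0.8):
    tpr, fpr = random_classifier_rates(p)
    print(f"p={p}: TPR={tpr:.3f} FPR={fpr:.3f}")
```

In each case the TPR and FPR both come out close to the chosen proportion, so the points sit on or very near the diagonal.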

If that diagonal line is where useless classifiers sit, and the ideal classifier is at the top left, it means we want good classifiers to be above and to the left of points on that diagonal.

We can see that moving a point up means increasing the true positive rate. This is good. Moving to the left means reducing the false positive rate. This is also a good thing. Doing both is even better!

The following ROC plot shows the three classifiers we discussed earlier.

We can see that the moderately good classifier is in the top left part of the ROC plot. It's not an excellent classifier and that's why it is not closer to the top left corner.

We can also see the classifier that assigns all data to be positive at the top right. It is on the "useless" line as expected.

The very bad classifier which only managed to identify one positive instance is at the bottom left very close to the corner. It's not perfectly bad, but it is pretty bad!
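
The coordinates of these three points can be worked out directly from the confusion matrices. This is a sketch using counts inferred from the earlier examples (6 well and 4 unwell people), with made-up names for the classifiers.

```python
def roc_point(tp, fn, fp, tn):
    """Return (FPR, TPR), the x and y coordinates on a ROC plot."""
    return fp / (fp + tn), tp / (tp + fn)


classifiers = {
    "moderately good": roc_point(tp=4, fn=2, fp=1, tn=3),
    "everyone healthy": roc_point(tp=6, fn=0, fp=4, tn=0),
    "very cautious": roc_point(tp=1, fn=5, fp=0, tn=4),
}

for name, (fpr, tpr) in classifiers.items():
    print(f"{name}: FPR={fpr:.2f}, TPR={tpr:.2f}")
```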

We've seen how ROC plots make it easy to see and compare the performance of classifiers.

Tuning Classifiers

Many classifiers can be tuned or configured. These might be simple settings for thresholds, or more sophisticated changes like the number of nodes in a neural network. Altering these settings changes the performance of the classifier. Sometimes the change is simple, but sometimes it is not.

The following shows our well/unwell classifier with a parameter that can be tuned.

It is good practice to run experiments with varying settings and record how well the classifier did on test data. Each test will result in a confusion matrix which we can plot on a ROC chart.

In the above illustration we can see several points from several experiments. The dots form a curve, called a ROC curve.

It is easy to see that as the setting is changed from one extreme to another, the classifier starts badly, improves, and then gets worse again. We can read off the point which is closest to the top-left ideal corner, and this will be the ideal setting for that classifier.
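
Reading off the closest point by eye can also be done in code. The experiment results below are invented purely for illustration, and straight-line distance to the corner is one reasonable way to measure "closest".

```python
import math

# Hypothetical experiments: (setting, FPR, TPR). These numbers are made up.
experiments = [
    (0.1, 0.05, 0.30),
    (0.3, 0.10, 0.60),
    (0.5, 0.20, 0.85),
    (0.7, 0.45, 0.92),
    (0.9, 0.80, 0.97),
]


def distance_to_ideal(fpr, tpr):
    """Straight-line distance from a ROC point to the top-left corner (0, 1)."""
    return math.hypot(fpr - 0.0, tpr - 1.0)


best_setting, best_fpr, best_tpr = min(
    experiments, key=lambda e: distance_to_ideal(e[1], e[2])
)
print(f"best setting={best_setting} at FPR={best_fpr}, TPR={best_tpr}")
```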

Easy!

Classifiers With Scores

Sometimes we can't adjust or tune a classifier. In these cases we can sometimes look behind the classification and find a value that led to that classification, often a probability. For example, neural networks have output nodes that have continuous values, often in the range 0.0 to 1.0, and these can be interpreted as the probability of a positive classification. We can still take advantage of the ROC method for finding an optimal classifier. We do this by ranking the test data by score, for example descending probability.

We then use a threshold value above which we say the classifier should produce a positive classification. Of course this might not match the actual classes of the data. We produce a confusion matrix by comparing the classifications given by that threshold with the actual classes, and plot the point on the ROC plot. We repeat this for different thresholds, each time producing a confusion matrix, which leads to a point on a ROC plot.

As we lower the threshold, we classify more points as positive. This increases the true positives, but it also increases the false positives, and we move towards the top right of the ROC plot.

The resulting dots on a ROC plot allow us to easily read off the ideal threshold which balances true positive and false positives.
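
The threshold sweep can be sketched as follows. The scores and labels are invented for illustration, and treating a score equal to the threshold as positive is an assumption of this sketch.

```python
# Hypothetical classifier scores and actual classes (1 = positive, 0 = negative).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 1, 0]


def roc_points(scores, labels, thresholds):
    """Return a list of (threshold, FPR, TPR), one entry per threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = []
    for t in thresholds:
        # Assumption: scores at or above the threshold are classified positive.
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((t, fp / n_neg, tp / n_pos))
    return points


points = roc_points(scores, labels, thresholds=[0.9, 0.7, 0.5, 0.3, 0.1])
for t, fpr, tpr in points:
    print(f"threshold={t}: FPR={fpr:.2f} TPR={tpr:.2f}")
```

Lowering the threshold moves the point from near the bottom left towards the top right, as described above.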

In some cases, this method allows us to find a threshold that is close to but not exactly 0.5, even when our intuition tells us it should be 0.5.

Area Under The Curve

ROC plots are rich with information, and we should resist the temptation to reduce their information into a single value. However, a score known as area under the curve, or AUC, is commonly used. It is simply the area under a ROC curve. We can intuitively see that the top purple curve represents a better performing classifier or classifiers because all the points are closer to the top left. The area under the purple curve is larger than the area under the green curve.

It is easy to conclude that a larger AUC means better classifier. However, it is worth being aware that this single value can hide important features of a ROC curve.

Here we can see the green curve actually gets closer to the top left than the purple curve, and so is a better classifier when configured properly. Simply comparing AUC would have told us that the purple classifier was better.
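
Here is a sketch of this effect. The AUC is estimated with the trapezium rule, which is one common way to do it, and both curves are invented for illustration rather than taken from the plots.

```python
import math


def auc(points):
    """Area under a ROC curve given as (FPR, TPR) points, by the trapezium rule."""
    pts = sorted(points)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


def closest_distance_to_ideal(points):
    """Smallest distance from any point on the curve to the corner (0, 1)."""
    return min(math.hypot(fpr, 1.0 - tpr) for fpr, tpr in points)


# Hypothetical curves, made up for this example.
purple = [(0.0, 0.0), (0.3, 0.7), (1.0, 1.0)]
green = [(0.0, 0.0), (0.2, 0.2), (0.25, 0.8), (0.9, 0.8), (1.0, 1.0)]

for name, curve in [("purple", purple), ("green", green)]:
    print(f"{name}: AUC={auc(curve):.3f} "
          f"closest distance={closest_distance_to_ideal(curve):.3f}")
```

With these made-up numbers the purple curve has the larger AUC, yet the green curve has a point that sits closer to the ideal corner.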

Class Skew?

A common concern is whether an imbalance between actual positive and negative data might skew the ROC curves or cause them to become inaccurate. This is a reasonable concern as many statistical techniques are in fact impacted by unbalanced classes. ROC curves aren't affected by class imbalance because the TPR is only calculated from actual positive data, and the FPR from actual negative data. If either rate were calculated from counts of both positive and negative instances, an imbalance would affect its value.

Let's look at an example to see this in action. The following shows the previous test data and confusion matrix.

The TPR is 4/6 and the FPR is 1/4.

If we now double the population of healthy people, that is, double the number of actually positive data, we have a new confusion matrix.

Doubling the population of actually well people has resulted in a doubling of true positives, and also the number of false negatives. The FP and TN counts are not affected at all because we still have 4 actually unwell people, and the same classifier treats them just as before.

Since TPR is a ratio, we have 8/12 which is the same as 4/6. The FPR is still 1/4 because the FP and TN counts haven't changed.

So class imbalance doesn't affect TPR and FPR and so ROC curves are not affected.
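
The same check in code, using the counts from the example. Precision is included only as a contrast: it mixes actual positives and actual negatives, so it does shift when the class balance changes.

```python
import math


def rates(tp, fn, fp, tn):
    """Return (TPR, FPR) from confusion matrix counts."""
    return tp / (tp + fn), fp / (fp + tn)


def precision(tp, fp):
    return tp / (tp + fp)


original = rates(tp=4, fn=2, fp=1, tn=3)
# Doubling the actually well people doubles TP and FN; FP and TN are unchanged.
doubled = rates(tp=8, fn=4, fp=1, tn=3)

print("original TPR, FPR:", original)
print("doubled  TPR, FPR:", doubled)
print("precision before and after:", precision(4, 1), precision(8, 1))
```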

This is an important feature because it allows us to easily compare classifiers tested on different data sets.

Finding The Best Classifier - Simple

Up to this point we've seen how easy it is to read off the optimal classifier on a ROC plot. The intention was to underline this ease and simplicity. Let's revisit this with a challenging ROC plot. Which of the two classifiers is better?

Both the green and purple classifiers seem to have points close to the ideal top left. In fact, their distance to the top left corner seems equal. How do we decide which is best?

The green classifier has a lower TPR but also a lower FPR in its optimal region. The purple classifier has a higher TPR but also a higher FPR.

We have to decide whether false positives are more costly to our scenario or not. In some situations, maximising the true positive rate no matter what is best. In other cases, we want to avoid false positives because the cost is too high, for example allowing someone to walk around the community with a dangerous infectious disease.

ROC curves don't take this decision away from us. They bring it to the fore and allow us to make an informed decision.

Finding The Best Classifier - Costs of False Positives and False Negatives

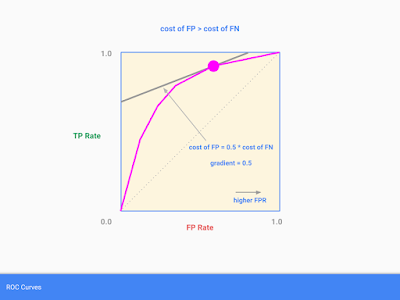

Here we'll look again at finding the best classifier by looking more closely at the relative costs of false positives and false negatives. Let's start with the simple case where the cost of a false positive is the same as a false negative.

Here we move a line with gradient 1 down from the top left corner until it touches the ROC curve. The point at which it touches the curve is considered the optimal classifier, or configuration of a classifier. This is what we have been doing before, but without saying we were using a line with gradient 1.

Now consider what happens if we know the cost of a false positive is twice the cost of a false negative. We want to be more cautious now and not accept as many false positives (because they cost more now). That means moving to the left on the ROC curve for our optimal point.

In fact, if you did the algebra, you'd find the slope of the line we use to touch the ROC curve is 2, to reflect the double cost of FP.

Similarly, if the cost of false positives was half that of false negatives, we'd use a line with gradient 0.5.

The false positive rate is higher now, and that's ok because they're cheap.

We might be tempted to say that the gradient is simply $\frac{\text{cost of FP}}{\text{cost of FN}}$, as we've seen so far.

Actually, the class balance, or imbalance, does matter now. If we have a very small number of actual negatives in the data, then even if there is a higher cost for false positives, they won't occur in large numbers. So our gradient also needs to take into account the class balance.

$$\text{gradient} = \frac{\text{neg}}{\text{pos}} \times \frac{\text{cost of FP}}{\text{cost of FN}}$$
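
The following sketch puts the gradient formula to work. A line with a given gradient lowered from the top left first touches the curve at the point with the largest intercept, that is, the largest value of TPR minus gradient times FPR. The ROC points, class counts and costs are all invented for illustration.

```python
def iso_cost_gradient(n_neg, n_pos, cost_fp, cost_fn):
    """Gradient of the line used to find the optimal point on a ROC curve."""
    return (n_neg / n_pos) * (cost_fp / cost_fn)


def best_point(roc_points, gradient):
    """Return the (FPR, TPR) point first touched by a line of this gradient
    lowered from the top left, i.e. the one maximising TPR - gradient * FPR."""
    return max(roc_points, key=lambda p: p[1] - gradient * p[0])


# Hypothetical ROC curve as (FPR, TPR) points.
roc = [(0.0, 0.0), (0.1, 0.6), (0.2, 0.75), (0.4, 0.89), (0.7, 0.95), (1.0, 1.0)]

equal_costs = best_point(roc, iso_cost_gradient(50, 50, cost_fp=1, cost_fn=1))
costly_fp = best_point(roc, iso_cost_gradient(50, 50, cost_fp=2, cost_fn=1))
cheap_fp = best_point(roc, iso_cost_gradient(50, 50, cost_fp=1, cost_fn=2))
# Same double cost for FP, but only a quarter as many negatives as positives.
few_negatives = best_point(roc, iso_cost_gradient(25, 100, cost_fp=2, cost_fn=1))

print(equal_costs, costly_fp, cheap_fp, few_negatives)
```

With these numbers, costly false positives move the optimal point to the left, cheap ones move it to the right, and a shortage of actual negatives can outweigh a higher false positive cost.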

The video tutorial linked above includes an example which includes both factors.

New Classifiers From Old

Have a look at the following ROC plots of two tuneable classifiers. The optimal configuration for the green classifier has a moderate TPR but a low FPR. The optimal point for the purple classifier has higher TPR but higher FPR too. On their own we can't have a TPR-FPR combination that's somewhere between the two.

Can we combine the two classifiers in some way to achieve an intermediate performance? MJ Scott (paper linked below) proves that we can do this.

In the plot above we have the green classifier A, and purple classifier B. Scott proves that we can combine A and B to create a classifier C which has a TPR-FPR that lies on the line between the two. This is done by randomly using A and B on data instances in a proportion that matches how far along the line we want to be. Clearly using A lots will mean we're closer to A. The following shows C which is halfway between A and B, achieved by choosing at random between A and B, each half the time.
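
The expected performance of the combined classifier is just a weighted average of the two points. The sketch below uses made-up positions for A and B, and the function name is only illustrative.

```python
import math


def combined_rates(a, b, use_a):
    """Expected (FPR, TPR) when each instance is given to classifier A with
    probability use_a, and to classifier B otherwise."""
    fpr = use_a * a[0] + (1 - use_a) * b[0]
    tpr = use_a * a[1] + (1 - use_a) * b[1]
    return fpr, tpr


a = (0.1, 0.6)  # hypothetical green classifier A as (FPR, TPR)
b = (0.5, 0.9)  # hypothetical purple classifier B as (FPR, TPR)

c = combined_rates(a, b, use_a=0.5)
print(f"C: FPR={c[0]:.2f} TPR={c[1]:.2f}")
```

Changing `use_a` slides C along the straight line between A and B.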

This can be even more useful than interpolating performance.

The following shows a dataset which a simple linear classifier will find hard to partition well.

There are two classes, shown as blue and green, and the task of finding a threshold to cleanly separate the two is impossible.

Any attempt at a good threshold has large amounts of the wrong class on each side. The following shows the ROC plot for a classifier as this threshold varies.

We can see as the threshold rises the TPR stops rising as FPR increases. At the halfway point, the classifier is as useless as a random classifier. Continuing on we have a rise in TPR but also a large FPR too. We can see straight away the ROC curve does not go anywhere near the ideal top left corner.

By picking the best points on this curve, we can combine them to achieve a classification rate that is closer to the ideal corner and not possible with the individual classifiers.
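
One way to read "picking the best points" is to take the upper convex hull of the ROC points, since any point on a straight line between two achievable points can be reached by mixing them at random. That reading, the ROC points and the function names below are assumptions made for this sketch.

```python
def cross(o, a, b):
    """Positive if the turn o -> a -> b is anticlockwise, negative if clockwise."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def upper_hull(points):
    """Upper convex hull of (FPR, TPR) points, including the trivial
    classifiers at (0, 0) and (1, 1)."""
    pts = sorted(set(points) | {(0.0, 0.0), (1.0, 1.0)})
    hull = []
    for p in pts:
        # Drop earlier points that would lie on or below the new edge.
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    return hull


# Hypothetical curve where TPR stalls in the middle before rising again.
curve = [(0.1, 0.45), (0.3, 0.5), (0.5, 0.5), (0.7, 0.55), (0.85, 0.95)]
hull = upper_hull(curve)
print(hull)

# Mixing the two interior hull points half and half.
a, b = hull[1], hull[2]
mixed = ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)
print(f"mixed classifier: FPR={mixed[0]:.3f} TPR={mixed[1]:.3f}")
```

With these numbers the mixed classifier reaches a TPR of about 0.70 at a FPR of about 0.475, whereas the original curve only manages a TPR of 0.5 at a FPR of 0.5.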

If you're not convinced this works, the video includes a worked example with new TPR and FPR rates worked out for a combined classifier.

The following is based on a plot showing experimental results from Scott's paper.

This example and illustrations are taken from Scott's paper.

Summary

The key benefits of ROC analysis are:

- An easy to read and interpret visual representation of classifier performance.

- An easy visual way to compare classifiers, even different kinds of classifiers.

- Independence from class balance allows comparison of classifiers tested on different data.

- Makes finding an optimal classifier or configuration easy, even when we care about costs of classifier errors.

- Combine classifiers to make one that can perform better than individual ones.

Further Reading

- ROC and AUC, Clearly Explained, great accessible video - https://www.youtube.com/watch?v=4jRBRDbJemM

- Trade-off Between Sensitivity and Specificity - https://www.youtube.com/watch?v=vtYDyGGeQyo

- Confusion Matrix in ML - https://www.geeksforgeeks.org/confusion-matrix-machine-learning/

- An Introduction To ROC Analysis, one of the best and authoritative overviews - http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.98.4088&rep=rep1&type=pdf

- MLWiki ROC Analysis - http://mlwiki.org/index.php/ROC_Analysis

- ROC, useful discussion on costs of classifier errors - http://www0.cs.ucl.ac.uk/staff/W.Langdon/roc/

- MJ Scott's maximum realisable performance using multiple classifiers - http://mi.eng.cam.ac.uk/reports/svr-ftp/auto-pdf/Scott_tr320.pdf